Reviewing AWS SSM DHMC - too many acronyms or a useful tool?

AWS Systems Manager (SSM) is an integral component for managing EC2 and other compute fleets, offering capabilities such as patch management, parameter store, and managing changes across a fleet of servers. It also offers a service called Session Manager allowing secure, audited access to EC2 instances without needing to expose the instances on the Internet.

In February 2023, AWS announced a new capability called Default Host Management Configuration, or DHMC, to simplify the setup of the core SSM capabilities by providing a way to ensure that SSM is available for all instances in an account.

Whilst SSM provides many capabilities to simplify the management of EC2 instances, today I’d like to consider it mainly in the context of providing access to EC2 servers and, in the process, review how the way we access those servers has changed over the years.

If you’d prefer to jump straight to the DHMC info, skip ahead to the section titled “DHMC - New kid on the block”.

A brief history lesson

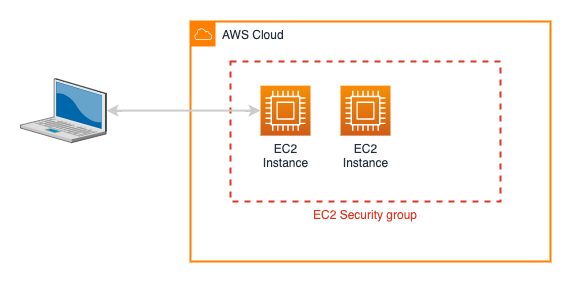

EC2 Classic

In the beginning (or 2006, which for AWS is pretty much the same thing), AWS launched EC2 (Elastic Compute Cloud, to use its full name). In those days, all EC2 instances sat in a flat network space shared by all customers, in what is now called EC2 Classic. Instances were secured through security groups, which act as firewalls controlling which network ports on the EC2 virtual servers can be reached.

This reliance on security groups meant it wasn’t unusual for a misconfiguration to expose EC2 instances accidentally, even to the public Internet.

VPCs for the win!

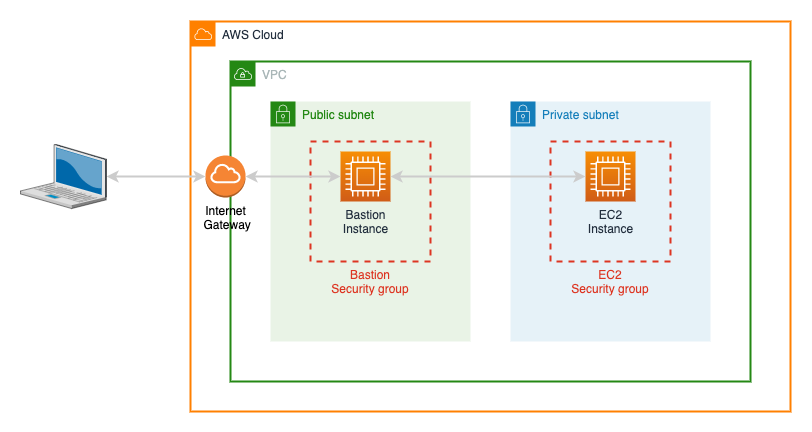

The real sea-change for AWS security came in August 2009, when AWS launched Virtual Private Clouds (VPCs). VPCs allowed customers to create isolated, managed networks with public or private subnets. Public subnets had direct connections to the Internet, whilst private subnets were isolated, providing more security.

However, this meant that it became more complex to access EC2 servers via ssh since they were no longer directly accessible. The standard solution was to create a ‘bastion’ host in a public subnet, which could be used as a jump point to access the servers in private subnets.

In theory, this meant you only had to concentrate on making a single server secure, but it also meant an extra server to be maintained, patched, and so on, and bastion hosts were often neglected.

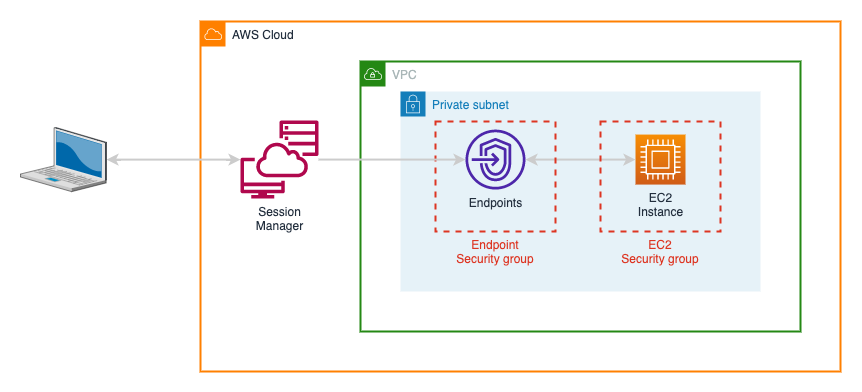

AWS SSM SM - horrible name, great service

After what seemed like an eternity in AWS timescales, AWS Systems Manager, or SSM, was launched in late 2017. Session Manager followed in 2018, giving us one of AWS’s most tongue-twisting names, AWS SSM SM, but finally providing a means of accessing EC2 servers via the browser or CLI with no need to expose servers in a public subnet.

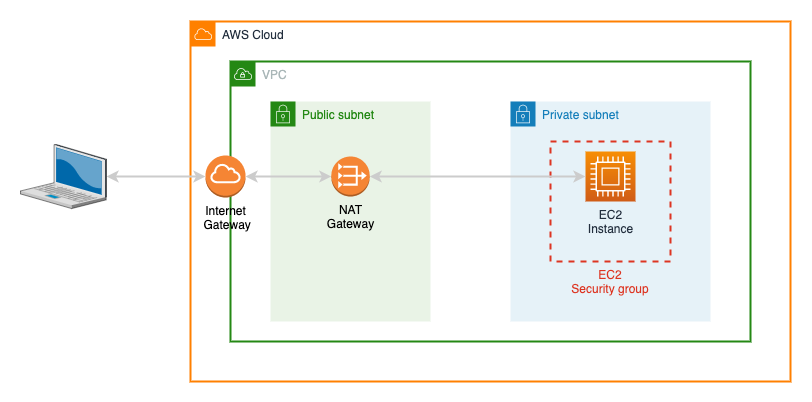

Living in a private subnet meant that the EC2 instances still needed a means of communicating with AWS API endpoints, either via VPC endpoints or NAT gateways.

Permissions to access the EC2 instances via Session Manager were controlled via the IAM instance profile and associated policies attached to each server, which added a small level of complication to IAM policies and caused sleepless nights for a few (myself included), until the magic combinations were mastered and we remembered to add them to every server we wanted to manage.
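For context, here is a minimal sketch of what that per-instance setup typically looked like in Terraform. The resource names (legacy_ssm_instance_role, legacy_ssm_core, legacy_ssm_profile) are hypothetical and only for illustration; AmazonSSMManagedInstanceCore is the AWS managed policy commonly attached for this purpose.

```hcl
# Hypothetical role that an EC2 instance assumes via its instance profile
resource "aws_iam_role" "legacy_ssm_instance_role" {
  name_prefix = "legacy_ssm_role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action    = "sts:AssumeRole"
        Effect    = "Allow"
        Principal = { Service = "ec2.amazonaws.com" }
      }
    ]
  })
}

# Grant the SSM Agent on the instance the core permissions it needs
resource "aws_iam_role_policy_attachment" "legacy_ssm_core" {
  role       = aws_iam_role.legacy_ssm_instance_role.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
}

# The profile then had to be attached to every instance we wanted to manage
resource "aws_iam_instance_profile" "legacy_ssm_profile" {
  name_prefix = "legacy_ssm_profile"
  role        = aws_iam_role.legacy_ssm_instance_role.name
}
```

The catch was that this policy attachment had to be repeated, or the same role reused, for every workload role in the account.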

DHMC - New kid on the block.

Fast forward to early 2023, and AWS announced new functionality in SSM: Default Host Management Configuration, or DHMC.

DHMC allows a single IAM role to be created in AWS, and used by SSM to manage access to all EC2 instances, separating the policies required to allow SSM to work, from those that manage the permissions for the workloads in EC2, such as accessing storage, databases or interacting with other AWS services. This means that all appropriately configured EC2 instances can now be automatically onboarded into, and managed by SSM.

To allow DHMC to be used, some prerequisites must be met:

- An IAM role with appropriate policies, either the AWS managed policy AmazonSSMManagedEC2InstanceDefaultPolicy or a custom one based upon it

- EC2 instances using

  - SSM Agent v3.2.582.0 or later

  - Instance Metadata Service Version 2

- Network access allowing communication between the EC2 instances and SSM services, which means an EC2 security group allowing outgoing HTTPS access applied to the EC2 instances, plus one of

  - Instances in a public subnet with a public IP

  - Private subnet instances and a NAT Gateway

  - Private subnet instances and appropriate VPC Endpoints: EC2, EC2Messages, SSM and SSMMessages
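If you go down the VPC Endpoints route rather than using a NAT Gateway, the endpoints could be sketched roughly as follows. This is an illustration rather than a tested configuration: it assumes a VPC and private subnet named aws_vpc.vpc and aws_subnet.private_subnet, that DNS support and DNS hostnames are enabled on the VPC (needed for private DNS), and the names endpoints, ssm_endpoint_sg and ssm_endpoints are hypothetical.

```hcl
# Hypothetical data source name, used only to build the endpoint service names
data "aws_region" "endpoints" {}

# Interface endpoints need a security group that accepts HTTPS from the VPC
resource "aws_security_group" "ssm_endpoint_sg" {
  name_prefix = "ssm_endpoint_sg"
  description = "Allow HTTPS from inside the VPC to the SSM interface endpoints"
  vpc_id      = aws_vpc.vpc.id

  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = [aws_vpc.vpc.cidr_block]
  }
}

# One interface endpoint per service listed in the prerequisites
resource "aws_vpc_endpoint" "ssm_endpoints" {
  for_each = toset(["ec2", "ec2messages", "ssm", "ssmmessages"])

  vpc_id              = aws_vpc.vpc.id
  service_name        = "com.amazonaws.${data.aws_region.endpoints.name}.${each.key}"
  vpc_endpoint_type   = "Interface"
  subnet_ids          = [aws_subnet.private_subnet.id]
  security_group_ids  = [aws_security_group.ssm_endpoint_sg.id]
  private_dns_enabled = true
}
```

Interface endpoints are billed per endpoint, so for a small test environment a NAT Gateway that already exists may be the simpler choice.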

Example of enabling DHMC

As an example of how to enable and test DHMC, I’ll provide some Terraform code. This code assumes a VPC configuration is in place with public and private subnets, a NAT Gateway in the public subnet, and appropriate routing tables.

Let’s start by creating a standard configuration, then we’ll enable DHMC.

First, we’ll create an IAM role and an instance profile which we can use to attach to the EC2 instances. In this case, we’ll just add some simple permissions to query S3 buckets:

# Role assumed by the EC2 instance itself (workload permissions only)
resource "aws_iam_role" "standard_ec2_role" {
  name_prefix = "standard_ec2_role"
  description = "IAM Role for EC2 use"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Effect = "Allow"
        Sid    = ""
        Principal = {
          Service = [
            "ec2.amazonaws.com"
          ]
        }
      },
    ]
  })
}

# Simple workload policy: list buckets and read objects in S3
resource "aws_iam_policy" "s3_access_policy" {
  name        = "ssm_s3_access_policy"
  path        = "/"
  description = "List S3 buckets and objects"

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = [
          "s3:ListAllMyBuckets",
          "s3:ListBucket",
          "s3:GetObject"
        ],
        Effect = "Allow"
        Sid    = "S3Listing"
        Resource = [
          "arn:aws:s3:::*",
          "arn:aws:s3:::*/*"
        ]
      }
    ]
  })
}

resource "aws_iam_role_policy_attachment" "s3_policy" {
  role       = aws_iam_role.standard_ec2_role.name
  policy_arn = aws_iam_policy.s3_access_policy.arn
}

# Instance profile used to attach the role to the EC2 instance
resource "aws_iam_instance_profile" "ec2_ssm_role_profile" {
  name_prefix = "ec2_demo_profile"
  role        = aws_iam_role.standard_ec2_role.name
}

Now, let’s create a security group allowing outgoing access on HTTPS, which we’ll use to allow the EC2 instances to work with SSM:

resource "aws_security_group" "ec2_security_group" {
  name_prefix = "ec2_ssm_test_sg"
  description = "Security Group for building ec2s to test SSM roles"
  vpc_id      = aws_vpc.vpc.id
}

# Outbound HTTPS only; no inbound rules are needed for Session Manager
resource "aws_security_group_rule" "ec2_outgoing" {
  security_group_id = aws_security_group.ec2_security_group.id
  type              = "egress"
  from_port         = 443
  to_port           = 443
  protocol          = "tcp"
  cidr_blocks       = ["0.0.0.0/0"]
}

And finally, let’s create an EC2 instance using the above items. We’ll use an Amazon Linux 2023 AMI (the name filter below pins a specific release) and update the installed SSM Agent:

data "aws_ami" "amazon-linux-2023" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["al2023-ami-2023.0.20230419.0-kernel-6.1-x86_64"]
  }
}

resource "aws_instance" "ec2_instance" {
  ami                    = data.aws_ami.amazon-linux-2023.id
  instance_type          = var.instance_type # assumed to be declared elsewhere
  iam_instance_profile   = aws_iam_instance_profile.ec2_ssm_role_profile.name
  vpc_security_group_ids = [aws_security_group.ec2_security_group.id]
  subnet_id              = aws_subnet.private_subnet.id

  # Install the latest SSM Agent at boot, then restart it
  user_data = <<-EOF
    #! /bin/bash
    sudo yum install -y https://s3.amazonaws.com/ec2-downloads-windows/SSMAgent/latest/linux_amd64/amazon-ssm-agent.rpm
    sudo systemctl restart amazon-ssm-agent
  EOF

  user_data_replace_on_change = true

  tags = {
    Name = "std_ssm_instance"
  }
}
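Since DHMC relies on Instance Metadata Service Version 2, one way to make sure an instance only uses IMDSv2 is to set it explicitly through the metadata_options block. The snippet below is a sketch under assumptions: imdsv2_example is a hypothetical resource name, and the AMI, subnet and instance type references assume the same definitions used elsewhere in this post.

```hcl
# Hypothetical instance showing only the settings relevant to IMDSv2
resource "aws_instance" "imdsv2_example" {
  ami           = data.aws_ami.amazon-linux-2023.id
  instance_type = var.instance_type
  subnet_id     = aws_subnet.private_subnet.id

  metadata_options {
    http_endpoint = "enabled"  # keep the metadata service reachable
    http_tokens   = "required" # session tokens required, i.e. IMDSv2 only
  }
}
```

In practice you would add the metadata_options block to your existing instance definition rather than creating a separate resource.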

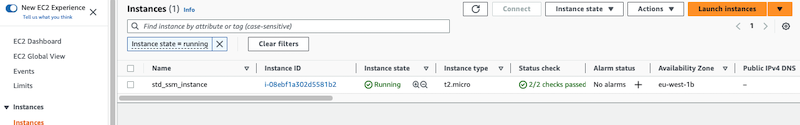

If we deploy the above configuration, and then use the AWS Console to review the EC2 instance created, we’ll see something like:

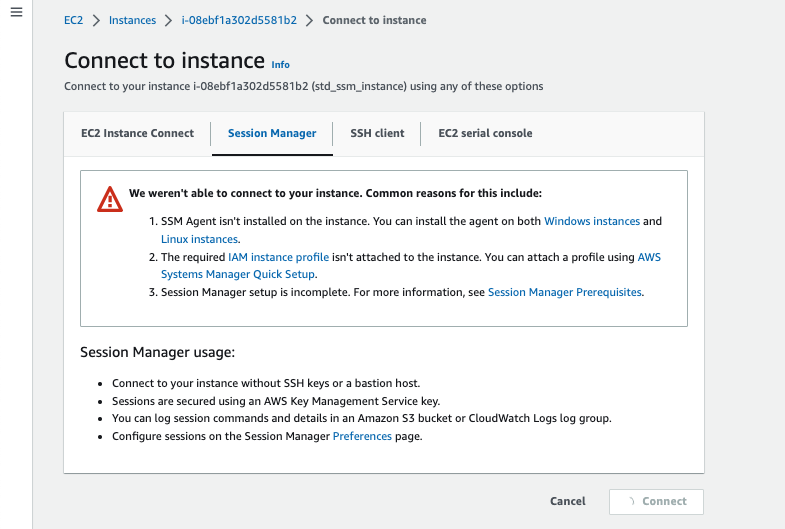

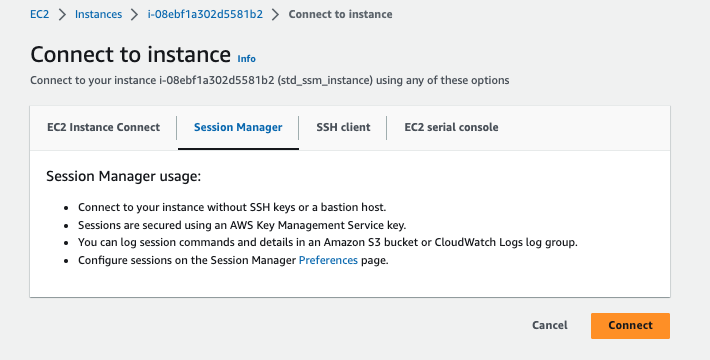

but if we try and use Session Manager to connect to it, by selecting the instance and then clicking on the Connect button in the console, we’ll see something like

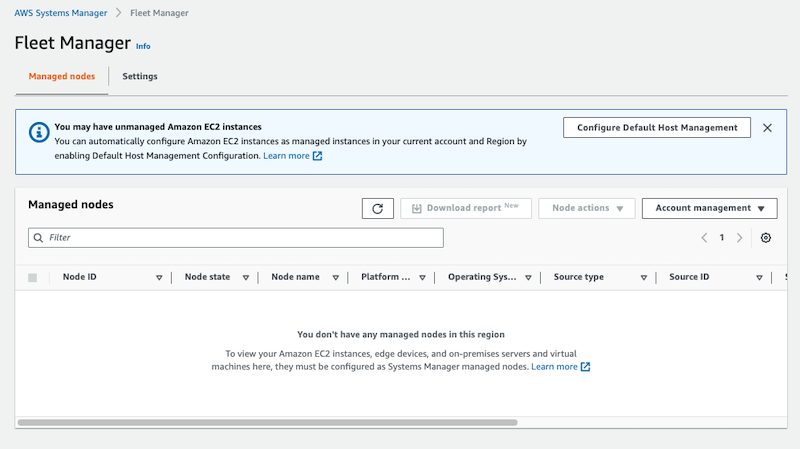

and finally, if we check Fleet Manager in the SSM console, we’ll see something like:

Now to enable DHMC, let’s first create a role that we can use to allow DHMC to manage the EC2 instance.

# Role assumed by the SSM service (not by the instance) on behalf of DHMC
resource "aws_iam_role" "ssm_dhmc_role" {
  name_prefix = "ssm_dhmc_role"
  description = "IAM Role allowing dhmc access"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Effect = "Allow"
        Sid    = ""
        Principal = {
          Service = [
            "ssm.amazonaws.com"
          ]
        }
      },
    ]
  })
}

resource "aws_iam_role_policy_attachment" "ssm_dhmc_policy" {
  role       = aws_iam_role.ssm_dhmc_role.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedEC2InstanceDefaultPolicy"
}

and then allow SSM to use that role to enable DHMC:

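# Region and account ID used to build the setting ARN
# (omit these two data sources if they are already declared in your configuration)
data "aws_region" "current" {}

data "aws_caller_identity" "current" {}

resource "aws_ssm_service_setting" "test_setting" {
  setting_id    = "arn:aws:ssm:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:servicesetting/ssm/managed-instance/default-ec2-instance-management-role"
  setting_value = aws_iam_role.ssm_dhmc_role.name
}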
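One thing to be aware of: as far as I understand it, this service setting is applied per region, so an account that runs instances in several regions needs the setting in each of them. A rough sketch of how a second region might be covered is shown below. The region eu-west-1 and the names dhmc_extra_region, dhmc_extra and dhmc_account are hypothetical, and the role is assumed to be the ssm_dhmc_role defined earlier (IAM roles are global, so the same role can be reused).

```hcl
# Hypothetical second region; replace with one you actually use
locals {
  dhmc_extra_region = "eu-west-1"
}

# Aliased provider pointing at the additional region
provider "aws" {
  alias  = "dhmc_extra"
  region = local.dhmc_extra_region
}

data "aws_caller_identity" "dhmc_account" {}

# Same DHMC setting, created through the aliased provider
resource "aws_ssm_service_setting" "dhmc_extra_region" {
  provider = aws.dhmc_extra

  setting_id    = "arn:aws:ssm:${local.dhmc_extra_region}:${data.aws_caller_identity.dhmc_account.account_id}:servicesetting/ssm/managed-instance/default-ec2-instance-management-role"
  setting_value = aws_iam_role.ssm_dhmc_role.name
}
```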

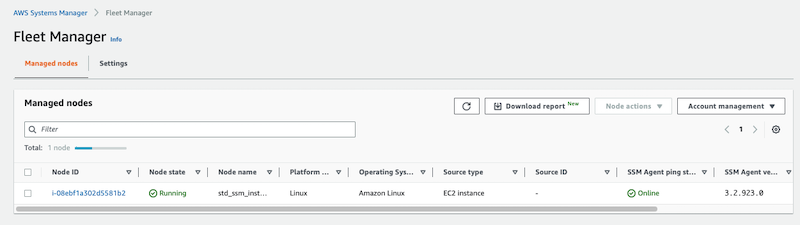

Now if we check the Fleet Manager console, we should see that we can now see the instance available for management via SSM:

And if we try to connect via the EC2 console, we should see that the connect option is now available

Conclusion - Is DHMC worthwhile?

Of course, whether to use DHMC is down to your circumstances. Personally, whilst there is currently a little overhead in the configuration, I think it’s worthwhile for the following reasons:

- Rather than having to enable SSM management on an instance-by-instance basis, we can now manage all EC2 instances in an account with a single role to configure.

- With SSM enabled, we can carry out tasks such as

  - Installing security updates or patching

  - Running compliance checks

- As described above, we can provide access to the EC2 instances without the need for ssh, or even configuring keys.

- The use of the DHMC role means we can remove the SSM specific policies from our instances, simplifying their configuration.

There are some minor complications, such as ensuring the systems have the correct version of the SSM Agent, but this is trivial compared to the improvements possible.